Introducing a new generation of serverless technology for Node.js: efficient, with near-zero cold starts, at hole.build.

Managing servers on complex infrastructure, with a professional team dedicated solely to monitoring and spending hours deciding how to scale to absorb large traffic peaks and heavy API usage, has always been a problem for high-availability, fast-growing projects.

Over time, several technologies and standards have been created to deal with this. Infrastructure is one of the most critical parts of a product: when it is not well planned and orchestrated, it can become a major headache for a fast-growing company, and it demands qualified staff for monitoring and security. For an early-stage startup this is a significant cost, because the team needs to grow quickly and focus on the product: validating it, winning its first customers and starting to sell.

Maintaining infrastructure and a dedicated monitoring and operations team can be very expensive, and paying for resources that sit unused or idle during periods of low traffic can weigh on the company's balance sheet.

A few years ago, the serverless (FaaS) movement and its technologies began to emerge, aiming to solve exactly these problems:

- auto-scaling
- scaling down when traffic drops
- no servers to manage
- no complex infrastructure
- paying for resources only when they are used

This is appealing, and it looks like the best of all worlds for building a product on, but it comes with a major drawback: performance. Functions that are not invoked frequently can suffer higher response latency than code running continuously on a server.

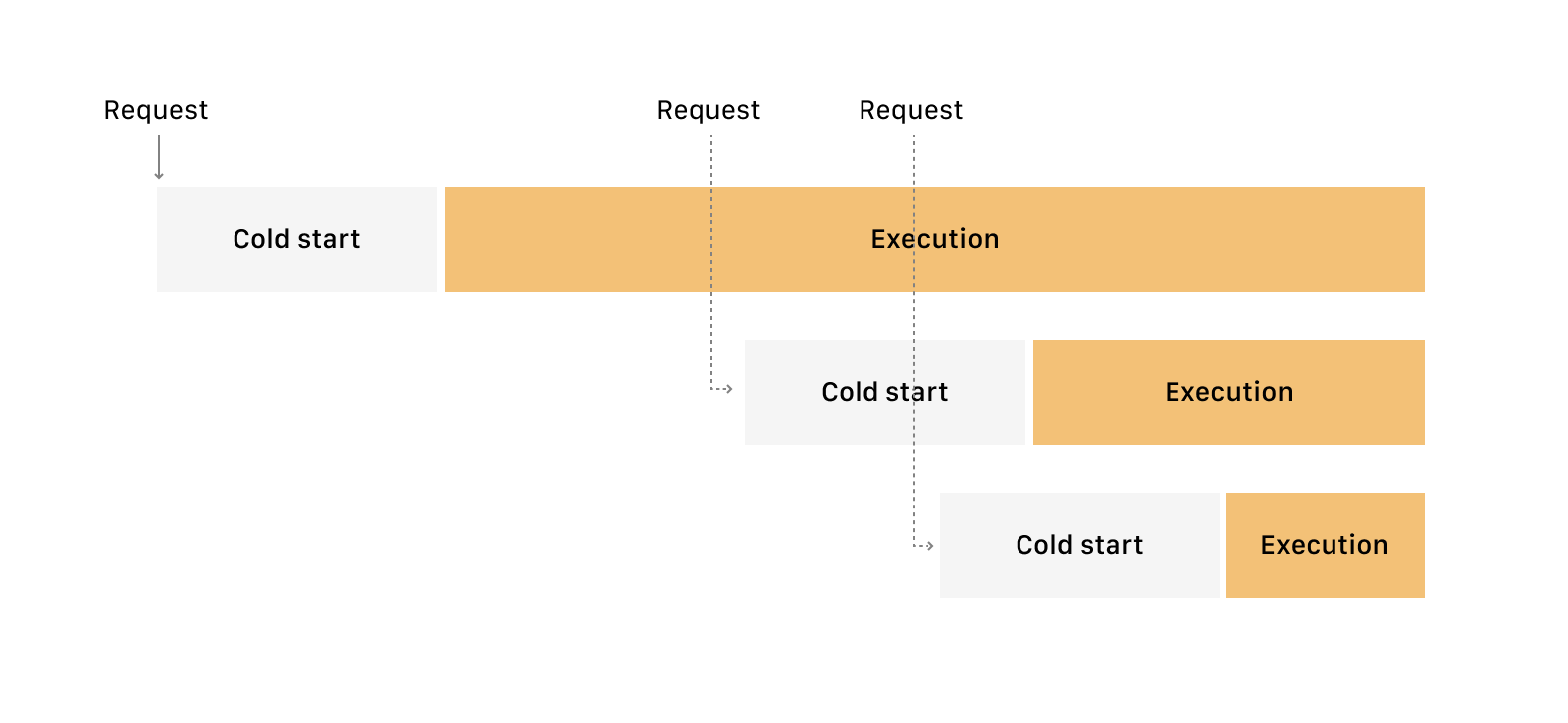

On other serverless platforms, when a request arrives, the platform provisions a container with your function's runtime, and there is a delay before your function can actually start executing and processing the request; this delay is called a cold start. The container is then kept warm for a while so that it can process further requests without a cold start. When new requests arrive while all existing containers are busy, additional containers must be provisioned to handle them, and each of those starts with a cold start.
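To make these mechanics concrete, here is a minimal TypeScript simulation of a generic container-based FaaS platform (not Hole, and not any specific vendor). The cold-start cost `COLD_START_MS`, the keep-warm window `IDLE_TIMEOUT_MS` and the `simulate` function are hypothetical names and values chosen only for illustration. Each simulated container serves one request at a time, is reused while warm, and is reclaimed after sitting idle too long.

```typescript
// Hypothetical model of a container-based FaaS platform. The constants are
// illustrative assumptions, not measurements of Hole or any other vendor.
const COLD_START_MS = 500;     // time to provision a container and boot the runtime
const IDLE_TIMEOUT_MS = 10000; // how long an idle container is kept warm

interface Invocation {
  arrival: number;  // ms since the start of the simulation
  duration: number; // ms of actual work done by the function
}

interface Container {
  busyUntil: number; // ms at which the container finishes its current request
}

function simulate(invocations: Invocation[]): { latencies: number[]; coldStarts: number } {
  const pool: Container[] = [];
  const latencies: number[] = [];
  let coldStarts = 0;

  for (const inv of [...invocations].sort((a, b) => a.arrival - b.arrival)) {
    // Reclaim containers that have been idle for longer than the keep-warm window.
    for (let i = pool.length - 1; i >= 0; i--) {
      if (inv.arrival - pool[i].busyUntil > IDLE_TIMEOUT_MS) pool.splice(i, 1);
    }

    // Each container serves one request at a time: reuse one that is free right now.
    const warm = pool.find((c) => c.busyUntil <= inv.arrival);
    if (warm) {
      warm.busyUntil = inv.arrival + inv.duration;
      latencies.push(inv.duration);
    } else {
      // No free warm container: provision a new one and pay the cold start.
      coldStarts++;
      pool.push({ busyUntil: inv.arrival + COLD_START_MS + inv.duration });
      latencies.push(COLD_START_MS + inv.duration);
    }
  }
  return { latencies, coldStarts };
}

const result = simulate([
  { arrival: 0, duration: 100 },     // first request: cold start
  { arrival: 50, duration: 100 },    // first container still busy: cold start
  { arrival: 1000, duration: 100 },  // a warm container is free: no cold start
  { arrival: 60000, duration: 100 }, // pool reclaimed after idling: cold start
]);
console.log(result.latencies, result.coldStarts); // [ 600, 600, 100, 600 ] 3
```

In this toy run, only the request that finds a free warm container avoids the cold start; both a burst of overlapping requests and a long quiet period force new containers to be provisioned.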

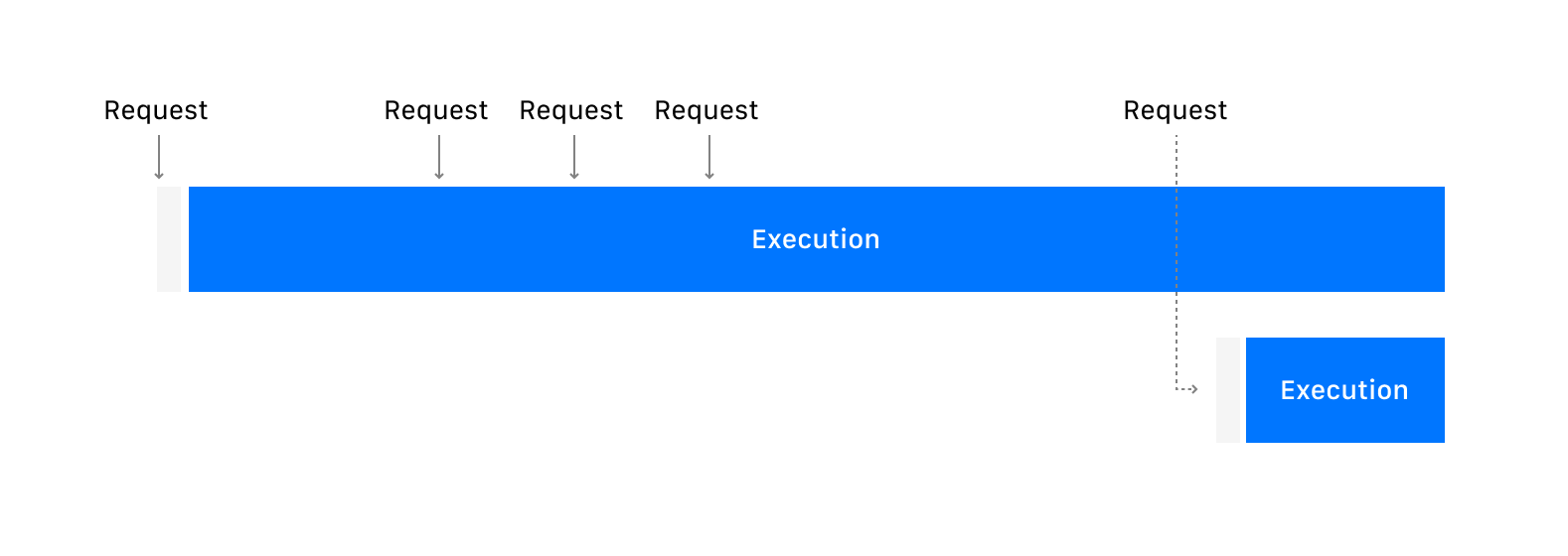

At Hole we built our technology to solve some of the main problems of serverless: performance, security, monitoring and debugging. Our functions run with near-zero cold starts; we restrict the environments in which functions execute and add extra layers of security around them; and we show detailed metrics on successful and failed requests, along with insights into your code's performance. Beyond improving the serverless technology itself, we care deeply about the experience of using it: the console, the design and friendly docs.

Besides near-zero cold starts, functions can be configured to handle more than one asynchronous request at a time, which raises the capacity of each provisioned instance of your function. You can read more about how our technology works in our documentation.
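Why per-instance concurrency matters can be sketched with a deliberately simplified model. This is a hypothetical illustration, not Hole's implementation: `instancesNeeded` and `maxConcurrency` are names invented here, and the model ignores cold-start duration and idle timeouts so that it only counts how many instances a traffic pattern forces the platform to provision, each of which would be a cold start.

```typescript
// Hypothetical model: each instance may serve up to `maxConcurrency` requests at once.
// Cold-start duration and idle timeouts are deliberately ignored; the function only
// counts how many instances (and therefore cold starts) a traffic pattern requires.
interface Req {
  arrival: number;  // ms
  duration: number; // ms
}

function instancesNeeded(requests: Req[], maxConcurrency: number): number {
  // For each instance, the finish times of the requests it is currently serving.
  const instances: number[][] = [];

  for (const req of [...requests].sort((a, b) => a.arrival - b.arrival)) {
    // Forget requests that have already finished when this one arrives.
    for (const inFlight of instances) {
      for (let i = inFlight.length - 1; i >= 0; i--) {
        if (inFlight[i] <= req.arrival) inFlight.splice(i, 1);
      }
    }

    const finish = req.arrival + req.duration;
    const withCapacity = instances.find((inFlight) => inFlight.length < maxConcurrency);
    if (withCapacity) {
      withCapacity.push(finish);
    } else {
      instances.push([finish]); // every instance is full: provision a new one
    }
  }
  return instances.length;
}

// Ten requests arriving at the same moment, each awaiting ~100 ms of I/O.
const burst = Array.from({ length: 10 }, () => ({ arrival: 0, duration: 100 }));
console.log(instancesNeeded(burst, 1)); // 10
console.log(instancesNeeded(burst, 4)); // 3
```

With one request per instance, ten simultaneous requests need ten instances; allowing four concurrent requests per instance cuts that to three. This suits typical Node.js handlers, which spend much of their time awaiting I/O such as database or HTTP calls rather than using the CPU.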

These are some of the crucial points we are tackling, but we want to go further in improving how companies interact and work with serverless technologies. This is just the beginning, and we have much more to show. It will be a long journey, and we are excited to share our learnings and thoughts as we progress.

Today, we are starting to accept teams and companies into our private alpha. If you are interested in joining early and influencing the direction of Hole, sign up here and follow us on Twitter.